Saving MultiCellDS data from BioFVM

Note: This is part of a series of “how-to” blog posts to help new users and developers of BioFVM.

Introduction

A major initiative for my lab has been MultiCellDS: a standard for multicellular data. The project aims to create model-neutral representations of simulation data (for both discrete and continuum models), which can also work for segmented experimental and clinical data. A single-time output is called a digital snapshot. An interdisciplinary, multi-institutional review panel has been hard at work to nail down the draft standard.

A BioFVM MultiCellDS digital snapshot includes program and user metadata (more information to be included in a forthcoming publication), an output of the microenvironment, and any cells that are secreting or uptaking substrates.

As of Version 1.1.0, BioFVM supports output saved to MultiCellDS XML files. Each download also includes a matlab function for importing MultiCellDS snapshots saved by BioFVM programs. This tutorial will get you going.

BioFVM (finite volume method for biological problems) is an open source code for solving 3-D diffusion of 1 or more substrates. It was recently published as open access in Bioinformatics here:

http://dx.doi.org/10.1093/bioinformatics/btv730

The project website is at http://BioFVM.MathCancer.org, and downloads are at http://BioFVM.sf.net.

Working with MultiCellDS in BioFVM programs

We include a MultiCellDS_test.cpp file in the examples directory of every BioFVM download (Version 1.1.0 or later). Create a new project directory, and copy the following files to it:

- BioFVM*.cpp and BioFVM*.h (from the main BioFVM directory)

- pugixml.* (from the main BioFVM directory)

- Makefile and MultiCellDS_test.cpp (from the examples directory)

Open the MultiCellDS_test.cpp file to see the syntax as you read the rest of this post.

See earlier tutorials (below) if you have trouble with this.

Setting metadata values

There are a few key bits of metadata. First, the program used for the simulation (all these fields are optional):

// the program name, version, and project website:

BioFVM_metadata.program.program_name = "BioFVM MultiCellDS Test";

BioFVM_metadata.program.program_version = "1.0";

BioFVM_metadata.program.program_URL = "http://BioFVM.MathCancer.org";

// who created the program (if known)

BioFVM_metadata.program.creator.surname = "Macklin";

BioFVM_metadata.program.creator.given_names = "Paul";

BioFVM_metadata.program.creator.email = "Paul.Macklin@usc.edu";

BioFVM_metadata.program.creator.URL = "http://BioFVM.MathCancer.org";

BioFVM_metadata.program.creator.organization = "University of Southern California";

BioFVM_metadata.program.creator.department = "Center for Applied Molecular Medicine";

BioFVM_metadata.program.creator.ORCID = "0000-0002-9925-0151";

// (generally peer-reviewed) citation information for the program

BioFVM_metadata.program.citation.DOI = "10.1093/bioinformatics/btv730";

BioFVM_metadata.program.citation.PMID = "26656933";

BioFVM_metadata.program.citation.PMCID = "PMC1234567";

BioFVM_metadata.program.citation.text = "A. Ghaffarizadeh, S.H. Friedman, and P. Macklin, "

"BioFVM: an efficient parallelized diffusive transport solver for 3-D biological "

"simulations, Bioinformatics, 2015. DOI: 10.1093/bioinformatics/btv730.";

BioFVM_metadata.program.citation.notes = "notes here";

BioFVM_metadata.program.citation.URL = "http://dx.doi.org/10.1093/bioinformatics/btv730";

// user information: who ran the program

BioFVM_metadata.program.user.surname = "Kirk";

BioFVM_metadata.program.user.given_names = "James T.";

BioFVM_metadata.program.user.email = "Jimmy.Kirk@starfleet.mil";

BioFVM_metadata.program.user.organization = "Starfleet";

BioFVM_metadata.program.user.department = "U.S.S. Enterprise (NCC 1701)";

BioFVM_metadata.program.user.ORCID = "0000-0000-0000-0000";

// And finally, data citation information (the publication where this simulation snapshot appeared)

BioFVM_metadata.data_citation.DOI = "10.1093/bioinformatics/btv730";

BioFVM_metadata.data_citation.PMID = "12345678";

BioFVM_metadata.data_citation.PMCID = "PMC1234567";

BioFVM_metadata.data_citation.text = "A. Ghaffarizadeh, S.H. Friedman, and P. Macklin, BioFVM: "

"an efficient parallelized diffusive transport solver for 3-D biological simulations, Bioinformatics, "

"2015. DOI: 10.1093/bioinformatics/btv730.";

BioFVM_metadata.data_citation.notes = "notes here";

BioFVM_metadata.data_citation.URL = "http://dx.doi.org/10.1093/bioinformatics/btv730";

You can sync the metadata current time, program runtime (wall time), and dimensional units using the following command. (This command is automatically run whenever you use the save command below.)

BioFVM_metadata.sync_to_microenvironment( M );

You can display a basic summary of the metadata via:

BioFVM_metadata.display_information( std::cout );

Setting options

By default (to save time and disk space), BioFVM saves the mesh as a Level 3 matlab file, whose location is embedded into the MultiCellDS XML file. You can disable this feature and revert to full XML (e.g., for human-readable cross-model reporting) via:

set_save_biofvm_mesh_as_matlab( false );

Similarly, BioFVM defaults to saving the values of the substrates in a compact Level 3 matlab file. You can override this with:

set_save_biofvm_data_as_matlab( false );

BioFVM by default saves the cell-centered sources and sinks. These take a lot of time to parse because they require very hierarchical data structures. You can disable saving the cells (basic_agents) via:

set_save_biofvm_cell_data( false );

Lastly, when you do save the cells, we default to a customized, minimal matlab format. You can revert to a more standard (but much larger) XML format with:

set_save_biofvm_cell_data_as_custom_matlab( false );

Saving a file

Saving the data is very straightforward:

save_BioFVM_to_MultiCellDS_xml_pugi( "sample" , M , current_simulation_time );

Your data will be saved in sample.xml. (Depending upon your options, it may generate several .mat files beginning with “sample”.)

If you’d like the filename to depend upon the simulation time, use something more like this:

double current_simulation_time = 10.347;

char filename_base [1024];

sprintf( filename_base , "sample_%f", current_simulation_time );

save_BioFVM_to_MultiCellDS_xml_pugi( filename_base , M, current_simulation_time );

Your data will be saved in sample_10.347000.xml. (Depending upon your options, it may generate several .mat files beginning with “sample_10.347000”.)
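Since sprintf writes into a fixed-size buffer without checking its length, a slightly more defensive variant uses snprintf and returns a std::string. The sketch below is one way to do that; the helper name make_snapshot_basename is hypothetical and not part of BioFVM, and you may need to pass result.c_str() if your version of the save function expects a C string.

```cpp
#include <cstdio>
#include <string>

// Hypothetical helper (not part of BioFVM): build a time-stamped base name
// such as "sample_10.347000" without risking a buffer overflow.
std::string make_snapshot_basename( const std::string& prefix , double time )
{
    char buffer[1024];
    // snprintf never writes more than sizeof(buffer) bytes, including the '\0'
    std::snprintf( buffer , sizeof(buffer) , "%s_%f" , prefix.c_str() , time );
    return std::string( buffer );
}
```

Calling make_snapshot_basename( "sample" , 10.347 ) gives the same base name as the sprintf version above, since both use the %f format.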

Compiling and running the program:

Edit the Makefile as below:

PROGRAM_NAME := MCDS_test

all: $(BioFVM_OBJECTS) $(pugixml_OBJECTS) MultiCellDS_test.cpp
	$(COMPILE_COMMAND) -o $(PROGRAM_NAME) $(BioFVM_OBJECTS) $(pugixml_OBJECTS) MultiCellDS_test.cpp

If you’re running OSX, you’ll probably need to update the compiler from “g++”. See these tutorials.

Then, at the command prompt:

make

./MCDS_test

On Windows, you’ll need to run without the ./:

make

MCDS_test

Working with MultiCellDS data in Matlab

Reading data in Matlab

Copy the read_MultiCellDS_xml.m file from the matlab directory (included in every BioFVM download, Version 1.1.0 or later) to your working directory. To read the data, just do this:

MCDS = read_MultiCellDS_xml( 'sample.xml' );

This should take around 30 seconds for larger data files (500,000 to 1,000,000 voxels with a few substrates, and around 250,000 cells). The long execution time is primarily because Matlab is ghastly inefficient at loops over hierarchical data structures. Increasing to 1,000,000 cells requires around 80-90 seconds to parse in matlab.

Plotting data in Matlab

Plotting the 3-D substrate data

First, let’s do some basic contour and surface plotting:

mid_index = round( length(MCDS.mesh.Z_coordinates)/2 );

contourf( MCDS.mesh.X(:,:,mid_index), ...

MCDS.mesh.Y(:,:,mid_index), ...

MCDS.continuum_variables(2).data(:,:,mid_index) , 20 ) ;

axis image

colorbar

xlabel( sprintf( 'x (%s)' , MCDS.metadata.spatial_units) );

ylabel( sprintf( 'y (%s)' , MCDS.metadata.spatial_units) );

title( sprintf('%s (%s) at t = %f %s, z = %f %s', MCDS.continuum_variables(2).name , ...

MCDS.continuum_variables(2).units , ...

MCDS.metadata.current_time , ...

MCDS.metadata.time_units, ...

MCDS.mesh.Z_coordinates(mid_index), ...

MCDS.metadata.spatial_units ) );

OR

contourf( MCDS.mesh.X_coordinates , MCDS.mesh.Y_coordinates, ...

MCDS.continuum_variables(2).data(:,:,mid_index) , 20 ) ;

axis image

colorbar

xlabel( sprintf( 'x (%s)' , MCDS.metadata.spatial_units) );

ylabel( sprintf( 'y (%s)' , MCDS.metadata.spatial_units) );

title( sprintf('%s (%s) at t = %f %s, z = %f %s', ...

MCDS.continuum_variables(2).name , ...

MCDS.continuum_variables(2).units , ...

MCDS.metadata.current_time , ...

MCDS.metadata.time_units, ...

MCDS.mesh.Z_coordinates(mid_index), ...

MCDS.metadata.spatial_units ) );

Here’s a surface plot:

surf( MCDS.mesh.X_coordinates , MCDS.mesh.Y_coordinates, ...

MCDS.continuum_variables(1).data(:,:,mid_index) ) ;

colorbar

axis tight

xlabel( sprintf( 'x (%s)' , MCDS.metadata.spatial_units) );

ylabel( sprintf( 'y (%s)' , MCDS.metadata.spatial_units) );

zlabel( sprintf( '%s (%s)', MCDS.continuum_variables(1).name, ...

MCDS.continuum_variables(1).units ) );

title( sprintf('%s (%s) at t = %f %s, z = %f %s', MCDS.continuum_variables(1).name , ...

MCDS.continuum_variables(1).units , ...

MCDS.metadata.current_time , ...

MCDS.metadata.time_units, ...

MCDS.mesh.Z_coordinates(mid_index), ...

MCDS.metadata.spatial_units ) );

Finally, here are some more advanced plots. The first is an “exploded” stack of contour plots:

clf

contourslice( MCDS.mesh.X , MCDS.mesh.Y, MCDS.mesh.Z , ...

MCDS.continuum_variables(2).data , [],[], ...

MCDS.mesh.Z_coordinates(1:15:length(MCDS.mesh.Z_coordinates)),20);

view([-45 10]);

axis tight;

xlabel( sprintf( 'x (%s)' , MCDS.metadata.spatial_units) );

ylabel( sprintf( 'y (%s)' , MCDS.metadata.spatial_units) );

zlabel( sprintf( 'z (%s)' , MCDS.metadata.spatial_units) );

title( sprintf('%s (%s) at t = %f %s', ...

MCDS.continuum_variables(2).name , ...

MCDS.continuum_variables(2).units , ...

MCDS.metadata.current_time, ...

MCDS.metadata.time_units ) );

Next, we show how to use isosurfaces with transparency:

clf

patch( isosurface( MCDS.mesh.X , MCDS.mesh.Y, MCDS.mesh.Z, ...

MCDS.continuum_variables(1).data, 1000 ), 'edgecolor', ...

'none', 'facecolor', 'r' , 'facealpha' , 1 );

hold on

patch( isosurface( MCDS.mesh.X , MCDS.mesh.Y, MCDS.mesh.Z, ...

MCDS.continuum_variables(1).data, 5000 ), 'edgecolor', ...

'none', 'facecolor', 'b' , 'facealpha' , 0.7 );

patch( isosurface( MCDS.mesh.X , MCDS.mesh.Y, MCDS.mesh.Z, ...

MCDS.continuum_variables(1).data, 10000 ), 'edgecolor', ...

'none', 'facecolor', 'g' , 'facealpha' , 0.5 );

hold off

% shading interp

camlight

view(3)

axis image

axis tight

camlight

lighting gouraud

xlabel( sprintf( 'x (%s)' , MCDS.metadata.spatial_units) );

ylabel( sprintf( 'y (%s)' , MCDS.metadata.spatial_units) );

zlabel( sprintf( 'z (%s)' , MCDS.metadata.spatial_units) );

title( sprintf('%s (%s) at t = %f %s', ...

MCDS.continuum_variables(1).name , ...

MCDS.continuum_variables(1).units , ...

MCDS.metadata.current_time, ...

MCDS.metadata.time_units ) );

You can get more 3-D volumetric visualization ideas at Matlab’s website. This visualization post at MIT also has some great tips.

Plotting the cells

Here is a basic 3-D plot for the cells:

plot3( MCDS.discrete_cells.state.position(:,1) , ...

MCDS.discrete_cells.state.position(:,2) , ...

MCDS.discrete_cells.state.position(:,3) , 'bo' );

view(3)

axis tight

xlabel( sprintf( 'x (%s)' , MCDS.metadata.spatial_units) );

ylabel( sprintf( 'y (%s)' , MCDS.metadata.spatial_units) );

zlabel( sprintf( 'z (%s)' , MCDS.metadata.spatial_units) );

title( sprintf('Cells at t = %f %s', MCDS.metadata.current_time, ...

MCDS.metadata.time_units ) );

plot3 is more efficient than scatter3, but scatter3 will give more coloring options. Here is the syntax:

scatter3( MCDS.discrete_cells.state.position(:,1), ...

MCDS.discrete_cells.state.position(:,2), ...

MCDS.discrete_cells.state.position(:,3) , 'bo' );

view(3)

axis tight

xlabel( sprintf( 'x (%s)' , MCDS.metadata.spatial_units) );

ylabel( sprintf( 'y (%s)' , MCDS.metadata.spatial_units) );

zlabel( sprintf( 'z (%s)' , MCDS.metadata.spatial_units) );

title( sprintf('Cells at t = %f %s', MCDS.metadata.current_time, ...

MCDS.metadata.time_units ) );

Jan Poleszczuk gives some great insights on plotting many cells in 3D at his blog. I’d recommend checking out his post on visualizing a cellular automaton model. At some point, I’ll update this post with prettier plotting based on his methods.

What’s next

Future releases of BioFVM will support reading MultiCellDS snapshots (for model initialization).

Matlab is pretty slow at parsing and visualizing large amounts of data. We also plan to include resources for accessing MultiCellDS data in VTK / Paraview and Python.


BioFVM warmup: 2D continuum simulation of tumor growth

Note: This is part of a series of “how-to” blog posts to help new users and developers of BioFVM. See also the guides to setting up a C++ compiler in Windows or OSX.

What you’ll need

- A working C++ development environment with support for OpenMP. See these prior tutorials if you need help.

- A download of BioFVM, available at http://BioFVM.MathCancer.org and http://BioFVM.sf.net. Use Version 1.0.3 or later.

- Matlab or Octave for visualization. Matlab might be available for free at your university. Octave is open source and available from a variety of sources.

Our modeling task

We will implement a basic 2-D model of tumor growth in a heterogeneous microenvironment, inspired by glioblastoma models by Kristin Swanson, Russell Rockne and others (e.g., this work), and continuum tumor growth models by Hermann Frieboes, John Lowengrub, and our own lab (e.g., this paper and this paper).

We will model tumor growth driven by a growth substrate, where cells die when the growth substrate is insufficient. The tumor cells will have motility. A continuum blood vasculature will supply the growth substrate, but tumor cells can degrade this existing vasculature. We will revisit and extend this model from time to time in future tutorials.

Mathematical model

Taking inspiration from the groups mentioned above, we’ll model a live cell density ρ of a relatively low-adhesion tumor cell species (e.g., glioblastoma multiforme). We’ll assume that tumor cells move randomly towards regions of low cell density (modeled as diffusion with motility μ). We’ll assume that the net birth rate rB is proportional to the concentration of growth substrate σ, which is released by blood vasculature with density b. Tumor cells can degrade the tissue and hence this existing vasculature. Tumor cells die at rate rD when the growth substrate level is too low. We assume that the tumor cell density cannot exceed a maximum level ρmax. A model that includes these effects is:

\[ \frac{ \partial \rho}{\partial t} = \mu \nabla^2 \rho + r_B(\sigma)\rho \left( 1 - \frac{ \rho}{\rho_\textrm{max}} \right) - r_D(\sigma) \rho \]

\[ \frac{ \partial b}{\partial t} = - r_\textrm{degrade} \rho b \]

\[ \frac{\partial \sigma}{ \partial t} = D\nabla^2 \sigma - \lambda_1 \sigma - \lambda_2 \rho \sigma + r_\textrm{deliv}b \left( \sigma_\textrm{max} - \sigma \right) \]

where for the birth and death rates, we’ll use the constitutive relations:

\[ r_B(\sigma) = r_B \max \left( \frac{\sigma - \sigma_\textrm{min}}{ \sigma_\textrm{max} - \sigma_\textrm{min} } , 0 \right)\]

\[r_D(\sigma) = r_D \max \left( \frac{ \sigma_\textrm{min} - \sigma}{\sigma_\textrm{min}} , 0 \right) \]

Mapping the model onto BioFVM

BioFVM solves on a vector u of substrates. We’ll set u = [ρ , b, σ ]. The code expects PDEs of the general form:

\[ \frac{\partial q}{\partial t} = D\nabla^2 q - \lambda q + S\left( q^* - q \right) - Uq\]

So, we determine the decay rate (λ), source function (S), and uptake function (U) for the cell density ρ and the growth substrate σ.

Cell density

We first slightly rewrite the PDE:

\[ \frac{ \partial \rho}{\partial t} = \mu \nabla^2 \rho + r_B(\sigma) \frac{ \rho}{\rho_\textrm{max}} \left( \rho_\textrm{max} - \rho \right) - r_D(\sigma)\rho \]

and then try to match to the general form term-by-term. While BioFVM wasn’t intended for solving nonlinear PDEs of this form, we can make it work by quasi-linearizing, with the following functions:

\[ S = r_B(\sigma) \frac{ \rho }{\rho_\textrm{max}} \hspace{1in} U = r_D(\sigma). \]

When implementing this, we’ll evaluate σ and ρ at the previous time step. The diffusion coefficient is μ, and the decay rate is zero. The target or saturation density is ρmax.

Growth substrate

Similarly, by matching the PDE for σ term-by-term with the general form, we use:

\[ S = r_\textrm{deliv}b, \hspace{1in} U = \lambda_2 \rho. \]

The diffusion coefficient is D, the decay rate is λ1, and the saturation density is σmax.

Blood vessels

Lastly, a term-by-term matching of the blood vessel equation gives the following functions:

\[ S=0 \hspace{1in} U = r_\textrm{degrade}\rho. \]

The diffusion coefficient, decay rate, and saturation density are all zero.

Implementation in BioFVM

- Start a project: Create a new directory for your project (I’d recommend “BioFVM_2D_tumor”), and enter the directory. Place a copy of BioFVM (the zip file) into your directory. Unzip BioFVM, and copy BioFVM*.h, BioFVM*.cpp, and pugixml* files into that directory.

- Copy the matlab visualization files: To help read and plot BioFVM data, we have provided matlab files. Copy all the *.m files from the matlab subdirectory to your project.

- Copy the empty project: BioFVM Version 1.0.3 or later includes a template project and Makefile to make it easier to get started. Copy the Makefile and template_project.cpp file to your project. Rename template_project.cpp to something useful, like 2D_tumor_example.cpp.

- Edit the makefile: Open a terminal window and browse to your project. Tailor the makefile to your new project:

notepad++ Makefile

Change the PROGRAM_NAME to 2Dtumor.

Also, rename main to 2D_tumor_example throughout the Makefile.

Lastly, note that if you are using OSX, you’ll probably need to change from “g++” to your installed compiler. See these tutorials.

- Start adapting 2D_tumor_example.cpp: First, open 2D_tumor_example.cpp:

notepad++ 2D_tumor_example.cpp

Just after the “using namespace BioFVM” section of the code, define some useful globals:

using namespace BioFVM;

// helpful -- have indices for each "species"
int live_cells = 0;
int blood_vessels = 1;
int oxygen = 2;

// some globals
double prolif_rate = 1.0 /24.0;
double death_rate = 1.0 / 6;
double cell_motility = 50.0 / 365.25 / 24.0 ; // 50 mm^2 / year --> mm^2 / hour
double o2_uptake_rate = 3.673 * 60.0; // 165 micron length scale
double vessel_degradation_rate = 1.0 / 2.0 / 24.0 ; // 2 days to disrupt tissue

double max_cell_density = 1.0;

double o2_supply_rate = 10.0;
double o2_normoxic = 1.0;
double o2_hypoxic = 0.2;

- Set up the microenvironment: Within main(), make sure we have the right number of substrates, and set them up:

// create a microenvironment, and set units
Microenvironment M;
M.name = "Tumor microenvironment";
M.time_units = "hr";
M.spatial_units = "mm";
M.mesh.units = M.spatial_units;

// set up and add all the densities you plan
M.set_density( 0 , "live cells" , "cells" );
M.add_density( "blood vessels" , "vessels/mm^2" );
M.add_density( "oxygen" , "cells" );

// set the properties of the diffusing substrates
M.diffusion_coefficients[live_cells] = cell_motility;
M.diffusion_coefficients[blood_vessels] = 0;
M.diffusion_coefficients[oxygen] = 6.0; // 1e5 microns^2/min in units mm^2 / hr

M.decay_rates[live_cells] = 0;
M.decay_rates[blood_vessels] = 0;
M.decay_rates[oxygen] = 0.01 * o2_uptake_rate; // 1650 micron length scale

Notice how our earlier global definitions of “live_cells”, “blood_vessels”, and “oxygen” make it easier to be sure we’re referencing the correct substrates in lines like these.
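The “length scale” comments in the code refer to the diffusion length scale L = sqrt(D/λ), the distance over which a substrate with diffusion coefficient D penetrates before a decay (or uptake) rate λ removes it. Here is a minimal sketch for checking those numbers; the function name is illustrative and not a BioFVM call.

```cpp
#include <cmath>

// Diffusion length scale L = sqrt( D / lambda ).
// D in mm^2/hr and lambda in 1/hr give L in mm.
// Only meaningful for lambda > 0; with no decay the length scale is unbounded.
double diffusion_length_scale( double D , double lambda )
{
    return std::sqrt( D / lambda );
}
```

With D = 6 mm^2/hr, the background oxygen decay rate 0.01 * 3.673 * 60 = 2.2038 hr^-1 gives about 1.65 mm (1650 microns), and the full uptake rate 3.673 * 60 = 220.38 hr^-1 gives about 0.165 mm (165 microns), matching the comments.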

- Resize the domain and test: For this example (and so the code runs very quickly), we’ll work in 2D in a 2 cm × 2 cm domain:

// set the mesh size
double dx = 0.05; // 50 microns
M.resize_space( 0.0 , 20.0 , 0, 20.0 , -dx/2.0, dx/2.0 , dx, dx, dx );

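Notice that we use a tissue thickness of dx (from -dx/2 to dx/2), which gives a single layer of voxels, so that we can use the 3D code for a 2D simulation. Now, let’s test (on Windows, run the program without the ./):

make

./2Dtumor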

Go ahead and cancel the simulation with [Control]+C after a few seconds. You should see something like this:

Starting program ...

Microenvironment summary: Tumor microenvironment:

Mesh information:
type: uniform Cartesian
Domain: [0,20] mm x [0,20] mm x [-0.025,0.025] mm
   resolution: dx = 0.05 mm
   voxels: 160000
   voxel faces: 0
   volume: 20 cubic mm
Densities: (3 total)
   live cells:
     units: cells
     diffusion coefficient: 0.00570386 mm^2 / hr
     decay rate: 0 hr^-1
     diffusion length scale: 75523.9 mm

   blood vessels:
     units: vessels/mm^2
     diffusion coefficient: 0 mm^2 / hr
     decay rate: 0 hr^-1
     diffusion length scale: 0 mm

   oxygen:
     units: cells
     diffusion coefficient: 6 mm^2 / hr
     decay rate: 2.2038 hr^-1
     diffusion length scale: 1.65002 mm

simulation time: 0 hr (100 hr max)

Using method diffusion_decay_solver__constant_coefficients_LOD_3D (implicit 3-D LOD with Thomas Algorithm) ...

simulation time: 10 hr (100 hr max)
simulation time: 20 hr (100 hr max)
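The voxel count in this summary is easy to verify by hand: 20 mm / 0.05 mm = 400 voxels in each of x and y, and a single voxel in z, for 400 × 400 × 1 = 160000 voxels. The sketch below does the same arithmetic; the function names are illustrative and not BioFVM calls.

```cpp
#include <cmath>

// Number of voxels along one axis of a uniform mesh with spacing dx.
// std::lround guards against (max - min) / dx landing just below an integer.
long voxels_along_axis( double min , double max , double dx )
{
    return std::lround( (max - min) / dx );
}

// Total voxel count for a box-shaped domain with the same spacing in x, y, and z.
long total_voxels( double x_min , double x_max ,
                   double y_min , double y_max ,
                   double z_min , double z_max , double dx )
{
    return voxels_along_axis( x_min , x_max , dx )
         * voxels_along_axis( y_min , y_max , dx )
         * voxels_along_axis( z_min , z_max , dx );
}
```

The reported volume follows the same way: 20 mm × 20 mm × 0.05 mm = 20 cubic mm.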

- Set up initial conditions: We’re going to make a small central focus of tumor cells, and a “bumpy” field of blood vessels.

// set initial conditions

// use this syntax to create a zero vector of length 3
// std::vector<double> zero(3,0.0);

std::vector<double> center(3);
center[0] = M.mesh.x_coordinates[M.mesh.x_coordinates.size()-1] /2.0;
center[1] = M.mesh.y_coordinates[M.mesh.y_coordinates.size()-1] /2.0;
center[2] = 0;

double radius = 1.0;

std::vector<double> one( M.density_vector(0).size() , 1.0 );

double pi = 2.0 * asin( 1.0 );

// use this syntax for a parallelized loop over all the
// voxels in your mesh:
#pragma omp parallel for
for( int i=0; i < M.number_of_voxels() ; i++ )
{
	std::vector<double> displacement = M.voxels(i).center - center;
	double distance = norm( displacement );

	// small central focus of tumor cells
	if( distance < radius )
	{
		M.density_vector(i)[live_cells] = 0.1;
	}
	// "bumpy" vessel field with values between 0 and 1
	M.density_vector(i)[blood_vessels]= 0.5
		+ 0.5*cos(0.4* pi * M.voxels(i).center[0])*cos(0.3*pi *M.voxels(i).center[1]);
	M.density_vector(i)[oxygen] = o2_normoxic;
}

- Change to a 2D diffusion solver:

// set up the diffusion solver, sources and sinks
M.diffusion_decay_solver = diffusion_decay_solver__constant_coefficients_LOD_2D;

- Set the simulation times: We’ll simulate 10 days, with output every 12 hours.

double t = 0.0;
double t_max = 10.0 * 24.0; // 10 days
double dt = 0.1;

double output_interval = 12.0; // how often you save data
double next_output_time = t; // next time you save data
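These variables drive the main time loop in the template project. As a rough sketch of how such a loop decides when to save (assuming a tolerance-based comparison, since repeatedly adding dt = 0.1 in floating point will not land exactly on 12.0), the hypothetical function below just records the save times instead of writing files:

```cpp
#include <cmath>
#include <vector>

// Step from t = 0 to t_max in increments of dt, and record each time at
// which output would be saved. Comparing with a tolerance avoids missing
// an output because of floating-point round-off in the accumulated time.
std::vector<double> output_times( double t_max , double dt , double output_interval )
{
    std::vector<double> saved;
    double t = 0.0;
    double next_output_time = t;
    const double tolerance = 0.01 * dt;

    while( t < t_max + tolerance )
    {
        if( std::fabs( t - next_output_time ) < tolerance )
        {
            saved.push_back( next_output_time ); // a real program would save data here
            next_output_time += output_interval;
        }
        t += dt; // a real program would also advance the simulation here
    }
    return saved;
}
```

With t_max = 240, dt = 0.1, and output_interval = 12, this records 21 save times (0, 12, ..., 240), which is why the plotting script later in this tutorial loops over i = 0 to 20.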

- Set up the source function:

void supply_function( Microenvironment* microenvironment, int voxel_index, std::vector<double>* write_here )
{
	// use this syntax to access the jth substrate in write_here
	// (*write_here)[j]
	// use this syntax to access the jth substrate in voxel voxel_index of microenvironment:
	// microenvironment->density_vector(voxel_index)[j]

	static double temp1 = prolif_rate / ( o2_normoxic - o2_hypoxic );

	// start with (oxygen - o2_hypoxic), clipped at zero
	(*write_here)[live_cells] = microenvironment->density_vector(voxel_index)[oxygen];
	(*write_here)[live_cells] -= o2_hypoxic;

	if( (*write_here)[live_cells] < 0.0 )
	{
		(*write_here)[live_cells] = 0.0;
	}
	else
	{
		// scale by prolif_rate / (o2_normoxic - o2_hypoxic) so the rate follows r_B(sigma),
		// then by the live cell density (max_cell_density is 1.0 here)
		(*write_here)[live_cells] *= temp1;
		(*write_here)[live_cells] *= microenvironment->density_vector(voxel_index)[live_cells];
	}

	(*write_here)[blood_vessels] = 0.0;
	(*write_here)[oxygen] = o2_supply_rate;
	(*write_here)[oxygen] *= microenvironment->density_vector(voxel_index)[blood_vessels];

	return;
}

Notice the use of the static internal variable temp1: the first time this function is called, it initializes this helper variable (to save some arithmetic operations down the road). The static variable is available to all subsequent calls of this function.

- Set up the target function (substrate saturation densities):

void supply_target_function( Microenvironment* microenvironment, int voxel_index, std::vector<double>* write_here )
{
	// use this syntax to access the jth substrate in write_here
	// (*write_here)[j]
	// use this syntax to access the jth substrate in voxel voxel_index of microenvironment:
	// microenvironment->density_vector(voxel_index)[j]

	(*write_here)[live_cells] = max_cell_density;
	(*write_here)[blood_vessels] = 1.0;
	(*write_here)[oxygen] = o2_normoxic;

	return;
}

- Set up the uptake function:

void uptake_function( Microenvironment* microenvironment, int voxel_index, std::vector<double>* write_here )
{
	// use this syntax to access the jth substrate in write_here
	// (*write_here)[j]
	// use this syntax to access the jth substrate in voxel voxel_index of microenvironment:
	// microenvironment->density_vector(voxel_index)[j]

	(*write_here)[live_cells] = o2_hypoxic;
	(*write_here)[live_cells] -= microenvironment->density_vector(voxel_index)[oxygen];
	if( (*write_here)[live_cells] < 0.0 )
	{
		(*write_here)[live_cells] = 0.0;
	}
	else
	{
		(*write_here)[live_cells] *= death_rate;
	}

	(*write_here)[oxygen] = o2_uptake_rate ;
	(*write_here)[oxygen] *= microenvironment->density_vector(voxel_index)[live_cells];

	(*write_here)[blood_vessels] = vessel_degradation_rate ;
	(*write_here)[blood_vessels] *= microenvironment->density_vector(voxel_index)[live_cells];

	return;
}

And that’s it. The source should be ready to go!

Source files

You can download completed source for this example here:

Using the code

Running the code

First, compile and run the code (on Windows, run the program without the ./):

make

./2Dtumor

The output should look like this:

Starting program ...

Microenvironment summary: Tumor microenvironment:

Mesh information:
type: uniform Cartesian
Domain: [0,20] mm x [0,20] mm x [-0.025,0.025] mm
   resolution: dx = 0.05 mm
   voxels: 160000
   voxel faces: 0
   volume: 20 cubic mm
Densities: (3 total)
   live cells:
     units: cells
     diffusion coefficient: 0.00570386 mm^2 / hr
     decay rate: 0 hr^-1
     diffusion length scale: 75523.9 mm

   blood vessels:
     units: vessels/mm^2
     diffusion coefficient: 0 mm^2 / hr
     decay rate: 0 hr^-1
     diffusion length scale: 0 mm

   oxygen:
     units: cells
     diffusion coefficient: 6 mm^2 / hr
     decay rate: 2.2038 hr^-1
     diffusion length scale: 1.65002 mm

simulation time: 0 hr (240 hr max)

Using method diffusion_decay_solver__constant_coefficients_LOD_2D (2D LOD with Thomas Algorithm) ...

simulation time: 12 hr (240 hr max)
simulation time: 24 hr (240 hr max)
simulation time: 36 hr (240 hr max)
simulation time: 48 hr (240 hr max)
simulation time: 60 hr (240 hr max)
simulation time: 72 hr (240 hr max)
simulation time: 84 hr (240 hr max)
simulation time: 96 hr (240 hr max)
simulation time: 108 hr (240 hr max)
simulation time: 120 hr (240 hr max)
simulation time: 132 hr (240 hr max)
simulation time: 144 hr (240 hr max)
simulation time: 156 hr (240 hr max)
simulation time: 168 hr (240 hr max)
simulation time: 180 hr (240 hr max)
simulation time: 192 hr (240 hr max)
simulation time: 204 hr (240 hr max)
simulation time: 216 hr (240 hr max)
simulation time: 228 hr (240 hr max)
simulation time: 240 hr (240 hr max)
Done!

Looking at the data

Now, let’s pop it open in matlab (or octave):

matlab

To load and plot a single time (e.g., the last time):

!ls *.mat

M = read_microenvironment( 'output_240.000000.mat' );

plot_microenvironment( M );

To add some labels:

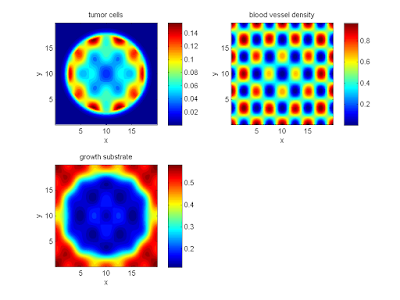

labels{1} = 'tumor cells';

labels{2} = 'blood vessel density';

labels{3} = 'growth substrate';

plot_microenvironment( M ,labels );

Your output should look a bit like this:

Lastly, you might want to script the code to create and save plots of all the times.

labels{1} = 'tumor cells';

labels{2} = 'blood vessel density';

labels{3} = 'growth substrate';

for i=0:20

t = i*12;

input_file = sprintf( 'output_%3.6f.mat', t );

output_file = sprintf( 'output_%3.6f.png', t );

M = read_microenvironment( input_file );

plot_microenvironment( M , labels );

print( gcf , '-dpng' , output_file );

end

What’s next

We’ll continue posting new tutorials on adapting BioFVM to existing and new simulators, as well as guides to new features as we roll them out.

Stay tuned and watch this blog!

Setting up gcc / OpenMP on OSX (Homebrew edition)

Note: This is part of a series of “how-to” blog posts to help new users and developers of BioFVM and PhysiCell. This guide is for OSX users. Windows users should use this guide instead. A Linux guide is expected soon.

These instructions should get you up and running with a minimal environment for compiling 64-bit C++ projects with OpenMP (e.g., BioFVM and PhysiCell) using gcc. These instructions were tested with OSX 10.11 (El Capitan) and 10.12 (Sierra), but they should work on any reasonably recent version of OSX.

In the end, you’ll have a compiler and key makefile capabilities. The entire toolchain is free and open source.

Of course, you can use other compilers and more sophisticated integrated desktop environments, but these instructions will get you a good baseline system with support for 64-bit binaries and OpenMP parallelization.

Note 1: OSX / Xcode appears to have gcc out of the box (you can type “gcc” in a Terminal window), but this really just maps back onto Apple’s build of clang. Alas, this will not support OpenMP for parallelization.

Note 2: In an earlier post, we showed how to set up gcc using the popular MacPorts package manager. Because MacPorts builds gcc (and all its dependencies!) from source, it takes a very, very long time. On my 2012 Macbook Air, this step took 16 hours. This tutorial uses Homebrew to dramatically speed up the process!

Note 3: This is an update over the previous version. It incorporates new information that Xcode command line tools can be installed without the full 4.41 GB download / installation of Xcode. Many thanks to Walter de Back and Tim at the Homebrew project for their help!

What you’ll need:

- XCode Command Line Tools: These command line tools are needed for Homebrew and related package managers. Installation instructions are now very simple and included below. As of January 18, 2016, this will install Version 2343.

- Homebrew: This is a package manager for OSX, which will let you easily download and install many linux utilities without building them from source. You’ll particularly need it for getting gcc. Installation is a simple command-line script, as detailed below. As of August 2, 2017, this will download Version 1.3.0.

- gcc (from Homebrew): This will be an up-to-date 64-bit version of gcc, with support for OpenMP. As of August 2, 2017, this will download Version 7.1.0.

Main steps:

1) Install the XCode Command Line Tools

Open a terminal window (Open Launchpad, then “Other”, then “Terminal”), and run:

user$ xcode-select --install

A window should pop up asking you to either get Xcode or install. Choose the “install” option to avoid the huge 4+ GB Xcode download. It should only take a few minutes to complete.

2) Install Homebrew

Open a terminal window (Open Launchpad, then “Other”, then “Terminal”), and run:

user$ ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

Let the script run, and answer “y” whenever asked. This will not take very long.

3) Get, install, and prepare gcc

Open a terminal window (see above), and search for gcc, version 7.x or above:

user$ brew search gcc

You should see a list of packages, including gcc7. (In 2015, this looked like “gcc5”. In 2017, this looks like “gcc@7”.)

Then, download and install gcc:

user$ brew install gcc

This will download whatever dependencies are needed, generally already pre-compiled. The whole process should only take five or ten minutes.

Lastly, you need to get the exact name of your compiler. In your terminal window, type g++, and then hit tab twice to see a list. On my system, I see this:

Pauls-MBA:~ pmacklin$ g++
g++ g++-7 g++-mp-7

Look for the version of g++ without an “mp” (from MacPorts) in its name. In my case, it’s g++-7. Double-check that you have the right one by checking its version. It should look something like this:

Pauls-MBA:~ pmacklin$ g++-7 --version
g++-7 (Homebrew GCC 7.1.0) 7.1.0
Copyright (C) 2017 Free Software Foundation, Inc.
This is free software; see the source for copying conditions. There is NO
warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

Notice that Homebrew shows up in the information. The correct compiler is g++-7.

PhysiCell Version 1.2.2 and greater use a system variable to record your compiler version, so that you don’t need to modify the CC line in PhysiCell Makefiles. Set the PHYSICELL_CPP variable to record the compiler you just found above. For example, on the bash shell:

export PHYSICELL_CPP=g++-7
echo export PHYSICELL_CPP=g++-7 >> ~/.bash_profile

One last thing: If you don’t update your paths, make may fail as it continues to combine Apple’s “gcc” toolchain with real gcc. (This seems to happen most often if you installed an older gcc like gcc5 with MacPorts earlier.) You may see errors like this:

user$ make
g++-7 -march=core2 -O3 -fomit-frame-pointer -fopenmp -std=c++11 -c BioFVM_vector.cpp
FATAL:/opt/local/bin/../libexec/as/x86_64/as: I don't understand 'm' flag!
make: *** [BIOFVM_vector.o] Error 1

To avoid this, run:

echo export PATH=/usr/local/bin:$PATH >> ~/.bash_profile

Note that you’ll need to open a new Terminal window for this fix to apply.

4) Test your setup

I wrote a sample C++ program that tests OpenMP parallelization (32 threads). If you can compile and run it, it means that everything (including make) is working! :-)

Make a new directory, and enter it

Open Terminal (see above). You should be in your user profile’s root directory. Make a new subdirectory called GCC_test, and enter it.

mkdir GCC_test
cd GCC_test

Grab a sample parallelized program:

Download a Makefile and C++ source file, and save them to the GCC_test directory. Here are the links:

- Makefile: [click here]

- C++ source: [click here]

Note: The Makefiles in PhysiCell (versions > 1.2.1) can use an environment variable to specify an OpenMP-capable g++ compiler. If you have not yet done so, you should go ahead and set that now, e.g., for the bash shell:

export PHYSICELL_CPP=g++-7
echo export PHYSICELL_CPP=g++-7 >> ~/.bash_profile

Compile and run the test:

Go back to your (still open) command prompt. Compile and run the program:

make
./my_test

The output should look something like this:

Allocating 4096 MB of memory ...
Done!
Entering main loop ...
Done!

Note 1: If the make command gives errors like “*** missing separator”, then you need to replace the white space (e.g., one or more spaces) at the start of the “$(COMPILE_COMMAND)” and “rm -f” lines with a single tab character.

Note 2: If the compiler gives an error like “fatal error: ‘omp.h’ not found”, you probably used Apple’s build of clang, which does not include OpenMP support. You’ll need to make sure that you set the environment variable PHYSICELL_CPP as above (for PhysiCell 1.2.2 or later), or specify your compiler on the CC line of your makefile (for PhysiCell 1.2.1 or earlier).

Now, let’s verify that the code is using OpenMP.

Open another Terminal window. While the code is running, run top. Take a look at the performance, particularly CPU usage. While your program is running, you should see CPU usage fairly close to ‘100% user’. (This is a good indication that your code is running the OpenMP parallelization as expected.)

What’s next?

Download a copy of PhysiCell and try out the included examples! Visit BioFVM at MathCancer.org.

- PhysiCell links:

- PhysiCell Method Paper at bioRxiv: https://doi.org/10.1101/088773

- PhysiCell on MathCancer: http://PhysiCell.MathCancer.org

- PhysiCell on SourceForge: http://PhysiCell.sf.net

- PhysiCell on github: http://github.com/MathCancer/PhysiCell

- PhysiCell tutorials: [click here]

- BioFVM links:

- BioFVM announcement on this blog: [click here]

- BioFVM on MathCancer.org: http://BioFVM.MathCancer.org

- BioFVM on SourceForge: http://BioFVM.sf.net

- BioFVM Method Paper in BioInformatics: http://dx.doi.org/10.1093/bioinformatics/btv730

- BioFVM tutorials: [click here]

Setting up gcc / OpenMP on OSX (Homebrew edition) (outdated)

Note 1: This is part of a series of “how-to” blog posts to help new users and developers of BioFVM and PhysiCell. This guide is for OSX users. Windows users should use this guide instead. A Linux guide is expected soon.

Note 2: This tutorial is outdated. Please see this updated version.

These instructions should get you up and running with a minimal environment for compiling 64-bit C++ projects with OpenMP (e.g., BioFVM and PhysiCell) using gcc. These instructions were tested with OSX 10.11 (El Capitan), but they should work on any reasonably recent version of OSX.

In the end result, you’ll have a compiler and key makefile capabilities. The entire toolchain is free and open source.

Of course, you can use other compilers and more sophisticated integrated desktop environments, but these instructions will get you a good baseline system with support for 64-bit binaries and OpenMP parallelization.

Note 3: OSX / Xcode appears to have gcc out of the box (you can type “gcc” in a Terminal window), but this really just maps back onto Apple’s build of clang. Alas, this will not support OpenMP for parallelization.

Note 4: Yesterday in this post, we showed how to set up gcc using the popular MacPorts package manager. Because MacPorts builds gcc (and all its dependencies!) from source, it takes a very, very long time. On my 2012 Macbook Air, this step took 16 hours. This tutorial uses Homebrew to dramatically speed up the process!

What you’ll need:

- XCode: This includes command line development tools. Evidently, it is required for both Homebrew and its competitors (e.g., MacPorts). Download the latest version in the App Store. (Search for xcode.) As of January 15, 2016, the App Store will install Version 7.2. Please note that this is a 4.41 GB download!

- Homebrew: This is a package manager for OSX, which will let you easily download and install many linux utilities without building them from source. You’ll particularly need it for getting gcc. Installation is a simple command-line script, as detailed below. As of January 17, 2016, this will download Version 0.9.5.

- gcc5 (from Homebrew): This will be an up-to-date 64-bit version of gcc, with support for OpenMP. As of January 17, 2016, this will download Version 5.2.0.

Main steps:

1) Download, install, and prepare XCode

As mentioned above, open the App Store, search for Xcode, and start the download / install. Go ahead and grab a coffee while it’s downloading and installing 4+ GB. Once it has installed, open Xcode, agree to the license, and let it install whatever components it needs.

Now, you need to get the command line tools. Go to the Xcode menu, select “Open Developer Tool”, and choose “More Developer Tools …”. This will open up a site in Safari and prompt you to log in.

Sign on with your AppleID, agree to yet more licensing terms, and then search for “command line tools” for your version of Xcode and OSX. (In my case, this is OSX 10.11 with Xcode 7.2) Click the + next to the correct version, and then the link for the dmg file. (Command_Line_Tools_OS_X_10.11_for_Xcode_7.2.dmg).

Double-click the dmg file. Double-click the pkg file it contains. Click “continue”, “OK”, and “agree” as much as it takes to install. Once done, go ahead and exit the installer and close the dmg file.

2) Install Homebrew

Open a terminal window (Open Launchpad, then “Other”, then “Terminal”), and run:

> ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

Let the script run, and answer “y” whenever asked. This will not take very long.

3) Get, install, and prepare gcc

Open a terminal window (see above), and search for gcc (version 5.x or above):

> brew search gcc5

You should see a list of packages, including gcc5. Take a note of what is found. (In my case, it found homebrew/versions/gcc5.)

Then, download and install gcc5:

> brew install homebrew/versions/gcc5

This will download whatever dependencies are needed, generally already pre-compiled. The whole process should only take five or ten minutes.

Lastly, you need to get the exact name of your compiler. In your terminal window, type g++, and then hit tab twice to see a list. On my system, I see this:

Pauls-MBA:~ pmacklin$ g++
g++ g++-5 g++-mp-5

Look for the version of g++ without an “mp” (for MacPorts) in its name. In my case, it’s g++-5. Double-check that you have the right one by checking its version. It should look something like this:

Pauls-MBA:~ pmacklin$ g++-5 --version
g++-5 (Homebrew gcc5 5.2.0) 5.2.0
Copyright (C) 2015 Free Software Foundation, Inc.
This is free software; see the source for copying conditions. There is NO
warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

Notice that Homebrew shows up in the information. The correct compiler is g++-5.

4) Test the compiler

Write a basic parallelized program:

Open Terminal (see above). You should be in your user profile’s root directory. Make a new subdirectory, enter it, and create a new file:

> mkdir omp_test
> cd omp_test
> nano omp_test.cpp

Then, write your basic OpenMP test:

#include <iostream>
#include <cmath>
#include <vector>
#include <omp.h>

int main( int argc, char* argv[] )
{
    omp_set_num_threads( 8 );

    double pi = acos( -1.0 );

    std::cout << "Allocating memory ..." << std::endl;
    std::vector<double> my_vector( 128000000, 0.0 );
    std::cout << "Done!" << std::endl << std::endl;

    std::cout << "Entering main loop ... " << std::endl;

    // cast size() once so the signed loop index is compared to a signed bound
    const int n = (int) my_vector.size();

    #pragma omp parallel for
    for( int i=0; i < n; i++ )
    {
        // do the arithmetic in double: i*i overflows a 32-bit int for large i
        double x = (double) i;
        my_vector[i] = exp( -sin( x*x + pi*log(x+1) ) );
    }

    std::cout << "Done!" << std::endl;

    return 0;
}

Save the file (as omp_test.cpp). (In nano, use [Control]+X, Y, and then confirm the choice of filename.)

In the omp_set_num_threads() line above, replace 8 with the maximum number of virtual processors on your CPU. (For a quad-core CPU with hyperthreading, this number is 8. On a hex-core CPU without hyperthreading, this number is 6.) If in doubt, leave it alone for now.

Write a makefile:

Next, create a Makefile to start editing:

> nano Makefile

Add the following contents:

CC := g++-5 # replace this with your correct compiler as identified above

ARCH := core2 # Replace this with your CPU architecture.
# core2 is pretty safe for most modern machines.

CFLAGS := -march=$(ARCH) -O3 -fopenmp -m64 -std=c++11

COMPILE_COMMAND := $(CC) $(CFLAGS)

OUTPUT := my_test

all: omp_test.cpp
	$(COMPILE_COMMAND) -o $(OUTPUT) omp_test.cpp

clean:
	rm -f *.o $(OUTPUT).*

Go ahead and save this (as Makefile). ([Control]-X, Y, confirm the filename.)

Compile and run the test:

Go back to your (still open) command prompt. Compile and run the program:

> make
> ./my_test

The output should look something like this:

Allocating memory ...
Done!
Entering main loop ...
Done!

Note 1: If the make command gives errors like “*** missing separator”, then you need to replace the white space (e.g., one or more spaces) at the start of the “$(COMPILE_COMMAND)” and “rm -f” lines with a single tab character.

Note 2: If the compiler gives an error like “fatal error: ‘omp.h’ not found”, you probably used Apple’s build of clang, which does not include OpenMP support. You’ll need to make sure that you specify your compiler on the CC line of your makefile.

Now, let’s verify that the code is using OpenMP.

Open another Terminal window. While the code is running, run top. Take a look at the performance, particularly CPU usage. While your program is running, you should see CPU usage fairly close to ‘100% user’. (This is a good indication that your code is running the OpenMP parallelization as expected.)

What’s next?

Download a copy of BioFVM and try out the included examples! Visit BioFVM at MathCancer.org.

- BioFVM announcement on this blog: [click here]

- BioFVM on MathCancer.org: http://BioFVM.MathCancer.org

- BioFVM on SourceForge: http://BioFVM.sf.net

- BioFVM Method Paper in BioInformatics: http://dx.doi.org/10.1093/bioinformatics/btv730

- BioFVM tutorials: [click here]

Setting up gcc / OpenMP on OSX (MacPorts edition)

Note: This is part of a series of “how-to” blog posts to help new users and developers of BioFVM and PhysiCell. This guide is for OSX users. Windows users should use this guide instead. A Linux guide is expected soon.

These instructions should get you up and running with a minimal environment for compiling 64-bit C++ projects with OpenMP (e.g., BioFVM and PhysiCell) using gcc. These instructions were tested with OSX 10.11 (El Capitan), but they should work on any reasonably recent version of OSX.

In the end result, you’ll have a compiler and key makefile capabilities. The entire toolchain is free and open source.

Of course, you can use other compilers and more sophisticated integrated desktop environments, but these instructions will get you a good baseline system with support for 64-bit binaries and OpenMP parallelization.

Note 1: OSX / Xcode appears to have gcc out of the box (you can type “gcc” in a Terminal window), but this really just maps back onto Apple’s build of clang. Alas, this will not support OpenMP for parallelization.

Note 2: This process is somewhat painful because MacPorts compiles everything from source, rather than using pre-compiled binaries. A separate tutorial uses Homebrew: a newer package manager that uses pre-compiled binaries to dramatically speed up the process. I highly recommend using the Homebrew version of this tutorial.

What you’ll need:

- XCode: This includes command line development tools. Evidently, it is required for both Macports and its competitors (e.g., Homebrew). Download the latest version in the App Store. (Search for xcode.) As of January 15, 2016, the App Store will install Version 7.2. Please note that this is a 4.41 GB download!

- MacPorts: This is a package manager for OSX, which will let you easily download, build and install many linux utilities. You’ll particularly need it for getting gcc. Download the latest installer (MacPorts-2.4.1-10.11-ElCapitan.pkg) here. As of August 2, 2017, this will download Version 2.4.1.

- gcc7 (from MacPorts): This will be an up-to-date 64-bit version of gcc, with support for OpenMP. As of August 2, 2017, this will download Version 7.1.1.

Main steps:

1) Download, install, and prepare XCode

As mentioned above, open the App Store, search for Xcode, and start the download / install. Go ahead and grab a coffee while it’s downloading and installing 4+ GB. Once it has installed, open Xcode, agree to the license, and let it install whatever components it needs.

Now, you need to get the command line tools. Go to the Xcode menu, select “Open Developer Tool”, and choose “More Developer Tools …”. This will open up a site in Safari and prompt you to log in.

Sign on with your AppleID, agree to yet more licensing terms, and then search for “command line tools” for your version of Xcode and OSX. (In my case, this is OSX 10.11 with Xcode 7.2) Click the + next to the correct version, and then the link for the dmg file. (Command_Line_Tools_OS_X_10.11_for_Xcode_7.2.dmg).

Double-click the dmg file. Double-click the pkg file it contains. Click “continue”, “OK”, and “agree” as much as it takes to install. Once done, go ahead and exit the installer and close the dmg file.

2) Install Macports

Double-click the MacPorts pkg file you downloaded above. OSX may complain with a message like this:

“MacPorts-2.4.1-10.11-ElCapitan.pkg” can’t be opened because it is from an unidentified developer.

If so, follow the directions here.

Leave all the default choices as they are in the installer. Click OK a bunch of times. The package scripts might take awhile.

Open a terminal window (Open Launchpad, then “Other”, then “Terminal”), and run:

sudo port -v selfupdate

to make sure that everything is up-to-date.

3) Get, install, and prepare gcc

Open a terminal window (see above), and search for gcc (version 7.x or above):

port search gcc7

You should see a list of packages, including gcc7.

Then, download, build and install gcc7:

sudo port install gcc7

This will download, build, and install any dependencies necessary for gcc7, including llvm and many, many other things. This takes even longer than the 4.4 GB download of Xcode. Go get dinner and a coffee. You may well need to let this run overnight. (On my 2012 Macbook Air, it required 16 hours to fully build gcc7 and its dependencies in a prior tutorial. We’ll discuss this point further below.)

Lastly, you need to get the exact name of your compiler. In your terminal window, type g++, and then hit tab twice to see a list. On my system, I see this:

Pauls-MBA:~ pmacklin$ g++
g++ g++-mp-7

Look for the version of g++ with an “mp” in its name. In my case, it’s g++-mp-7. Double-check that you have the right one by checking its version. It should look something like this:

Pauls-MBA:~ pmacklin$ g++-mp-7 --version
g++-mp-7 (MacPorts gcc7 7-20170622_0) 7.1.1 20170622
Copyright (C) 2017 Free Software Foundation, Inc.
This is free software; see the source for copying conditions. There is NO
warranty; not even for MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

Notice that MacPorts shows up in the information. The correct compiler is g++-mp-7.

PhysiCell Version 1.2.2 and greater use a system variable to record your compiler version, so that you don’t need to modify the CC line in PhysiCell Makefiles. Set the PHYSICELL_CPP variable to record the compiler you just found above. For example, on the bash shell:

export PHYSICELL_CPP=g++-mp-7
echo export PHYSICELL_CPP=g++-mp-7 >> ~/.bash_profile

4) Test your setup

I wrote a sample C++ program that tests OpenMP parallelization (32 threads). If you can compile and run it, it means that everything (including make) is working! :-)

Make a new directory, and enter it

Open Terminal (see above). You should be in your user profile’s root directory. Make a new subdirectory called GCC_test, and enter it.

mkdir GCC_test
cd GCC_test

Grab a sample parallelized program:

Download a Makefile and C++ source file, and save them to the GCC_test directory. Here are the links:

- Makefile: [click here]

- C++ source: [click here]

Note: The Makefiles in PhysiCell (versions > 1.2.1) can use an environment variable to specify an OpenMP-capable g++ compiler. If you have not yet done so, you should go ahead and set that now, e.g., for the bash shell:

export PHYSICELL_CPP=g++-mp-7
echo export PHYSICELL_CPP=g++-mp-7 >> ~/.bash_profile

Compile and run the test:

Go back to your (still open) command prompt. Compile and run the program:

make
./my_test

The output should look something like this:

Allocating 4096 MB of memory ...
Done!
Entering main loop ...
Done!

Note 1: If the make command gives errors like “*** missing separator”, then you need to replace the white space (e.g., one or more spaces) at the start of the “$(COMPILE_COMMAND)” and “rm -f” lines with a single tab character.

Note 2: If the compiler gives an error like “fatal error: ‘omp.h’ not found”, you probably used Apple’s build of clang, which does not include OpenMP support. You’ll need to make sure that you set the environment variable PHYSICELL_CPP as above (for PhysiCell 1.2.2 or later), or specify your compiler on the CC line of your makefile (for PhysiCell 1.2.1 or earlier).

Now, let’s verify that the code is using OpenMP.

Open another Terminal window. While the code is running, run top. Take a look at the performance, particularly CPU usage. While your program is running, you should see CPU usage fairly close to ‘100% user’. (This is a good indication that your code is running the OpenMP parallelization as expected.)

MacPorts and Pain

MacPorts builds all the tools from source. While this ensures that you get very up-to-date binaries, it is very, very slow!

However, all hope is not lost. It turns out that Homebrew will install pre-compiled binaries, so the 16-hour process of installing gcc is reduced to about 5-10 minutes. Check back tomorrow for a follow-up tutorial on how to use Homebrew to set up gcc.

What’s next?

Download a copy of PhysiCell and try out the included examples! Visit BioFVM at MathCancer.org.

- PhysiCell links:

- PhysiCell Method Paper at bioRxiv: https://doi.org/10.1101/088773

- PhysiCell on MathCancer: http://PhysiCell.MathCancer.org

- PhysiCell on SourceForge: http://PhysiCell.sf.net

- PhysiCell on github: http://github.com/MathCancer/PhysiCell

- PhysiCell tutorials: [click here]

- BioFVM links:

- BioFVM announcement on this blog: [click here]

- BioFVM on MathCancer.org: http://BioFVM.MathCancer.org

- BioFVM on SourceForge: http://BioFVM.sf.net

- BioFVM Method Paper in BioInformatics: http://dx.doi.org/10.1093/bioinformatics/btv730

- BioFVM tutorials: [click here]

Setting up a 64-bit gcc/OpenMP environment on Windows

Note: This is part of a series of “how-to” blog posts to help new users and developers of BioFVM and PhysiCell. This guide is for Windows users. OSX users should use this guide for Homebrew (preferred method) or this guide for MacPorts (much slower but reliable). A Linux guide is expected soon.

These instructions should get you up and running with a minimal environment for compiling 64-bit C++ projects with OpenMP (e.g., BioFVM and PhysiCell) using a 64-bit Windows port of gcc. These instructions should work for any modern Windows installation, say Windows 7 or above. This tutorial assumes you have a 64-bit CPU running on a 64-bit operating system.

In the end result, you’ll have a compiler, key makefile capabilities, and a decent text editor. The entire toolchain is free and open source.

Of course, you can use other compilers and more sophisticated integrated desktop environments, but these instructions will get you a good baseline system with support for 64-bit binaries and OpenMP parallelization.

What you’ll need:

- MinGW-w64 compiler: This is a native port of the venerable gcc compiler for windows, with support for 64-bit executables. Download the latest installer (mingw-w64-install.exe) here. As of February 3, 2020, this installer will download gcc 8.1.0.

- MSYS tools: This gets you some of the common command-line utilities from Linux, Unix, and BSD systems (make, touch, etc.). Download the latest installer (mingw-get-setup.exe) here.

- Notepad++ text editor: This is a full-featured text editor for Windows, including syntax highlighting, easy commenting, tabbed editing, etc. Download the latest version here. As of February 3, 2020, this will download Version 7.8.4.

Main steps:

1) Install the compiler

Run the mingw-w64-install.exe. When asked, select:

Version: 8.1.0 (or later)

Architecture: x86_64

Threads: posix (switched to posix to support PhysiBoSS)

Exception: seh (While sjlj works and should be more compatible with various GNU tools, the native SEH should be faster.)

Build version: 0 (or the default)

Leave the destination folder wherever the installer wants to put it. In my case, it is:

C:\Program Files\mingw-w64\x86_64-8.1.0-posix-seh-rt_v6-rev0

Let MinGW-w64 download and install whatever it needs.

2) Install the MSYS tools

Run mingw-get-setup.exe. Leave the default installation directory and any other defaults on the initial screen. Click “continue” and let it download what it needs to get started. (a bunch of XML files, for the most part.) Click “Continue” when it’s done.

This will open up a new package manager. Our goal here is just to grab MSYS, rather than the full (but merely 32-bit) compiler. Scroll through and select (“mark for installation”) the following:

- mingw-developer-toolkit. (Note: This should automatically select msys-base.)

Next, click “Apply Changes” in the “Installation” menu. When prompted, click “Apply.” Let the package manager download and install what it needs (on the order of 95 packages). Go ahead and close things once the installation is done, including the package manager.

3) Install the text editor

Run the Notepad++ installer. You can stick with the defaults.

4) Add these tools to your system path

Adding the compiler, text editor, and MSYS tools to your path lets you run make, the compiler, and the editor from any command prompt. First, get the path of your compiler:

- Open Windows Explorer ( [Windows]+E )

- Browse through C:\, then Program Files, mingw-w64, then a messy path name corresponding to our installation choices (in my case, x86_64-8.1.0-posix-seh-rt_v6-rev0), then mingw64, and finally bin.

- Record your answer. For me, it’s

C:\Program Files\mingw-w64\x86_64-8.1.0-posix-seh-rt_v6-rev0\mingw64\bin\

Then, get the path to Notepad++.

- Go back to Explorer, and choose “This PC” or “My Computer” from the left column.

- Browse through C:\, then Program Files (x86), then Notepad++.

- Copy the path from the Explorer address bar.

- Record your answer. For me, it’s

c:\Program Files (x86)\Notepad++\

Then, get the path for MSYS:

- Go back to Explorer, and choose “This PC” or “My Computer” from the left column.

- Browse through C:\, then MinGW, then msys, then 1.0, and finally bin.

- Copy the path from the Explorer address bar.

- Record your answer. For me, it’s

C:\MinGW\msys\1.0\bin\

Lastly, add these paths to the system path, as in this tutorial. (Note that later versions of the mingw-w64 installer might already automatically update your path.)

5) Test your setup

I wrote a sample C++ program that tests OpenMP parallelization (32 threads). If you can compile and run it, it means that everything (including make) is working! :-)

Make a new directory, and enter it

Enter a command prompt ( [windows]+R, then cmd ). You should be in your user profile’s root directory. Make a new subdirectory, called GCC_test, and enter it.

mkdir GCC_test
cd GCC_test

Grab a sample parallelized program:

Download a Makefile and C++ source file, and save them to the GCC_test directory. Here are the links:

- Makefile: [click here]

- C++ source: [click here]

Compile and run the test:

Go back to your (still open) command prompt. Compile and run the program:

make
my_test

The output should look something like this:

Allocating 4096 MB of memory ...
Done!
Entering main loop ...
Done!

Open up the Windows task manager ([windows]+R, taskmgr) while the code is running. Take a look at the performance tab, particularly the graphs of the CPU usage history. While your program is running, you should see all your virtual processors 100% utilized, unless you have more than 32 virtual CPUs. (This is a good indication that your code is running the OpenMP parallelization as expected.)

Note: If the make command gives errors like “*** missing separator”, then you need to replace the white space (e.g., one or more spaces) at the start of the “$(COMPILE_COMMAND)” and “rm -f” lines with a single tab character.

What’s next?

Download a copy of PhysiCell and try out the included examples! Visit BioFVM at MathCancer.org.

- PhysiCell links:

- PhysiCell Method Paper at bioRxiv: https://doi.org/10.1101/088773

- PhysiCell on MathCancer: http://PhysiCell.MathCancer.org

- PhysiCell on SourceForge: http://PhysiCell.sf.net

- PhysiCell on github: http://github.com/MathCancer/PhysiCell

- PhysiCell tutorials: [click here]

- BioFVM links:

- BioFVM announcement on this blog: [click here]

- BioFVM on MathCancer.org: http://BioFVM.MathCancer.org

- BioFVM on SourceForge: http://BioFVM.sf.net

- BioFVM Method Paper in BioInformatics: http://dx.doi.org/10.1093/bioinformatics/btv730

- BioFVM tutorials: [click here]

BioFVM: an efficient, parallelized diffusive transport solver for 3-D biological simulations

I’m very excited to announce that our 3-D diffusion solver has been accepted for publication and is now online at Bioinformatics. Click here to check out the open access preprint!

A. Ghaffarizadeh, S.H. Friedman, and P. Macklin. BioFVM: an efficient, parallelized diffusive transport solver for 3-D biological simulations. Bioinformatics, 2015.

DOI: 10.1093/bioinformatics/btv730 (free; open access)

BioFVM (short for “Finite Volume Method for biological problems”) is an open source package to solve for 3-D diffusion of several substrates with desktop workstations, single supercomputer nodes, or even laptops (for smaller problems). We built it from the ground up for biological problems, with optimizations in C++ and OpenMP to take advantage of all those cores on your CPU. The code is available at SourceForge and BioFVM.MathCancer.org.

The main idea here is to make it easier to simulate big, cool problems in 3-D multicellular biology. We’ll take care of secretion, diffusion, and uptake of things like oxygen, glucose, metabolic waste products, signaling factors, and drugs, so you can focus on the rest of your model.

Design philosophy and main capabilities

Solving diffusion equations efficiently and accurately is hard, especially in 3D. Almost all biological simulations deal with this, many by using explicit finite differences (easy to code and accurate, but very slow!) or implicit methods like ADI (accurate and relatively fast, but difficult to code with complex linking to libraries). While real biological systems often depend upon many diffusing things (lots of signaling factors for cell-cell communication, growth substrates, drugs, etc.), most solvers only scale well to simulating two or three. We solve a system of PDEs of the following form:

\[ \frac{\partial \vec{\rho}}{\partial t} = \overbrace{ \vec{D} \nabla^2 \vec{\rho} }^{\textrm{diffusion}} - \overbrace{ \vec{\lambda} \vec{\rho} }^{\textrm{decay}} + \overbrace{ \vec{S} \left( \vec{\rho}^* - \vec{\rho} \right) }^{\textrm{bulk source}} - \overbrace{ \vec{U} \vec{\rho} }^{\textrm{bulk uptake}} + \overbrace{ \sum_{\textrm{cells } k} 1_k(\vec{x}) \left[ \vec{S}_k \left( \vec{\rho}^*_k - \vec{\rho} \right) - \vec{U}_k \vec{\rho} \right] }^{\textrm{sources and sinks by cells}} \]

Above, all vector-vector products are term-by-term.

Solving for many diffusing substrates

We set out to write a package that could simulate many diffusing substrates using algorithms that were fast but simple enough to optimize. To do this, we wrote the entire solver to work on vectors of substrates, rather than on individual PDEs. In performance testing, we found that simulating 10 diffusing things only takes about 2.6 times longer than simulating one. (In traditional codes, simulating ten things takes ten times as long as simulating one.) We tried our hardest to break the code in our testing, but we failed. We simulated all the way from 1 diffusing substrate up to 128 without any problems. Adding new substrates increases the computational cost linearly.

Combining simple but tailored solvers

We used an approach called operator splitting: breaking a complicated PDE into a series of simpler PDEs and ODEs, which can be solved one at a time with implicit methods. This allowed us to write a very fast diffusion/decay solver, a bulk supply/uptake solver, and a cell-based secretion/uptake solver. Each of these individual solvers was individually optimized. Theory tells us that if each individual solver is first-order accurate in time and stable, then the overall approach is first-order accurate in time and stable.

The beauty of the approach is that each solver can individually be improved over time. For example, in BioFVM 1.0.2, we doubled the performance of the cell-based secretion/uptake solver. The operator splitting approach also lets us add new terms to the “main” PDE by writing new solvers, rather than rewriting a large, monolithic solver. We will take advantage of this to add advective terms (critical for interstitial flow) in future releases.

Optimizing the diffusion solver for large 3-D domains

For the first main release of BioFVM, we restricted ourselves to Cartesian meshes, which allowed us to write very tailored mesh data structures and diffusion solvers. (Note: the finite volume method reduces to finite differences on Cartesian meshes with trivial Neumann boundary conditions.) We intend to work on more general Voronoi meshes in a future release. (This will be particularly helpful for sources/sinks along blood vessels.)

By using constant diffusion and decay coefficients, we were able to write very fast solvers for Cartesian meshes. We use the locally one-dimensional (LOD) method–a specialized form of operator splitting–to break the 3-D diffusion problem into a series of 1-D diffusion problems. For each (y,z) in our mesh, we have a 1-D diffusion problem along x. This yields a tridiagonal linear system which we can solve efficiently with the Thomas algorithm. Moreover, because the forward-sweep steps only depend upon the coefficient matrix (which is unchanging over time), we can pre-compute and store the results in memory for all the x-diffusion problems. In fact, the structure of the matrix allows us to pre-compute part of the back-substitution steps as well. Same for y- and z-diffusion. This gives a big speedup.
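Here is a rough sketch of the pre-computation trick for one 1-D strip. It assumes a backward Euler discretization with zero-flux boundaries and leaves out the decay term for brevity. The names ThomasFactors, precompute and solve_in_place are hypothetical, not BioFVM's API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Factors of the tridiagonal matrix that do not change from step to step.
struct ThomasFactors {
    double r;                       // D * dt / dx^2
    std::vector<double> inv_denom;  // 1 / (b_i - a_i * c'_{i-1})
    std::vector<double> c_prime;    // modified super-diagonal c'_i
};

// Do the matrix-only part of the forward sweep once, up front.
// Rows: -r * u[i-1] + (1 + 2r) * u[i] - r * u[i+1] = rhs[i],
// with diagonal (1 + r) at both ends (assumed zero-flux boundaries).
ThomasFactors precompute(std::size_t n, double D, double dt, double dx) {
    assert(n >= 2);
    ThomasFactors f;
    f.r = D * dt / (dx * dx);
    f.inv_denom.resize(n);
    f.c_prime.resize(n);
    for (std::size_t i = 0; i < n; ++i) {
        const bool boundary = (i == 0 || i + 1 == n);
        const double b = boundary ? 1.0 + f.r : 1.0 + 2.0 * f.r;
        const double c = (i + 1 == n) ? 0.0 : -f.r;
        // a_i = -r for i >= 1, so b - a * c'_{i-1} = b + r * c'_{i-1}
        const double denom = (i == 0) ? b : b + f.r * f.c_prime[i - 1];
        f.inv_denom[i] = 1.0 / denom;
        f.c_prime[i] = c * f.inv_denom[i];
    }
    return f;
}

// Per-time-step work: only the right-hand side is swept.
// On entry u holds the old values (the rhs); on exit, the new values.
void solve_in_place(const ThomasFactors& f, std::vector<double>& u) {
    const std::size_t n = u.size();
    assert(n == f.inv_denom.size() && n >= 2);
    u[0] *= f.inv_denom[0];
    for (std::size_t i = 1; i < n; ++i)
        u[i] = (u[i] + f.r * u[i - 1]) * f.inv_denom[i];  // forward sweep
    for (std::size_t i = n - 1; i-- > 0;)
        u[i] -= f.c_prime[i] * u[i + 1];                  // back-substitution
}
```

Because every strip along x shares the same matrix, one ThomasFactors object can serve all of them, and solve_in_place needs only one multiply-add per entry in each sweep.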

Next, we can use all those CPU cores to speed up our work. While the back-substitution steps of the Thomas algorithm can’t be easily parallelized (it’s a serial operation), we can solve many x-diffusion problems at the same time, using independent copies (instances) of the Thomas solver. So, we split all the x-diffusion problems across a big OpenMP loop, and repeat for y- and z-diffusion.
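The loop structure might look something like the sketch below. The Field type, its x-fastest memory layout, and sweep_x are assumptions made for illustration, not BioFVM's data structures. The strip solver is passed in as a callable, and it must be safe to call from several threads at once.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical dense 3-D field, stored with x varying fastest:
// index = i + nx * (j + ny * k)
struct Field {
    int nx, ny, nz;
    std::vector<double> data;

    Field(int nx_, int ny_, int nz_, double value = 0.0)
        : nx(nx_), ny(ny_), nz(nz_),
          data(static_cast<std::size_t>(nx_) * ny_ * nz_, value) {
        assert(nx_ > 0 && ny_ > 0 && nz_ > 0);
    }

    double& at(int i, int j, int k) {
        return data[i + static_cast<std::size_t>(nx) * (j + static_cast<std::size_t>(ny) * k)];
    }
};

// Run a 1-D solver on every x-strip. Each (y,z) strip touches its own
// memory, so the strips can be handed to different threads.
// solve_strip(pointer_to_first_entry, number_of_entries) must be thread-safe.
template <class StripSolver>
void sweep_x(Field& f, StripSolver solve_strip) {
    const int strips = f.ny * f.nz;
    #pragma omp parallel for
    for (int s = 0; s < strips; ++s) {
        double* strip = &f.data[static_cast<std::size_t>(s) * f.nx];
        solve_strip(strip, f.nx);  // serial work inside one strip
    }
}
```

Compiled without OpenMP support, the pragma is ignored and the loop simply runs serially, which is handy for debugging. The y and z sweeps would follow the same pattern, except that their strips are strided in memory with this layout.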

Lastly, we used overloaded +=, axpy and similar operations on the vector of substrates, to avoid unnecessary (and very expensive) memory allocation and copy operations wherever we could. This was a really fun code to write!
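As a rough illustration of the idea (a sketch with assumed signatures, not a copy of BioFVM's own routines), in-place operations on a vector of substrates could be written like this. Nothing here allocates: every result is written back into storage that already exists.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// y += x, term by term, reusing y's storage (no temporary vector).
std::vector<double>& operator+=(std::vector<double>& y, const std::vector<double>& x) {
    assert(y.size() == x.size());
    for (std::size_t i = 0; i < y.size(); ++i) y[i] += x[i];
    return y;
}

// y <- y + a * x with a scalar a.
void axpy(std::vector<double>& y, double a, const std::vector<double>& x) {
    assert(y.size() == x.size());
    for (std::size_t i = 0; i < y.size(); ++i) y[i] += a * x[i];
}

// y <- y + a .* x, where the product is term by term
// (one coefficient per substrate).
void axpy(std::vector<double>& y, const std::vector<double>& a, const std::vector<double>& x) {
    assert(y.size() == x.size() && a.size() == x.size());
    for (std::size_t i = 0; i < y.size(); ++i) y[i] += a[i] * x[i];
}
```

Compare this with writing y = y + a * x using operators that return new vectors: that form would create and copy at least two temporaries for every voxel at every time step.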

The work seems to have paid off: we have found that solving on 1 million voxel meshes (about 8 mm³ at 20 μm resolution) is easy even for laptops.

Simulating many cells

We tailored the solver to allow both lattice- and off-lattice cell sources and sinks. Desktop workstations should have no trouble with 1,000,000 cells secreting and uptaking a few substrates.

Simplifying the non-science

We worked to minimize external dependencies, because few things are more frustrating than tracking down a bunch of libraries that may not work together on your platform. The first release of BioFVM has only one external dependency: pugixml (an XML parser). We didn’t link an entire linear algebra library just to get axpy and a Thomas solver–it wouldn’t have been optimized for our system anyway. We implemented what we needed of the freely available .mat file specification, rather than requiring a separate library for that. (We have used these matlab read/write routines in house for several years.)

Similarly, we stuck to a very simple mesh data structure so we wouldn’t have to maintain compatibility with general mesh libraries (which tend to favor feature sets and generality over performance and simplicity). Rather than use general-purpose ODE solvers (with yet more library dependencies, and more work for maintaining compatibility), we wrote simple solvers tailored specifically to our equations.

The upshot of this is that you don’t have to do anything fancy to replicate results with BioFVM. Just grab a copy of the source, drop it into your project directory, include it in your project (e.g., your makefile), and you’re good to go.

All the juicy details

The Bioinformatics paper is just 2 pages long, using the standard “Applications Note” format. It’s a fantastic format for announcing and disseminating a piece of code, and we’re grateful to be published there. But you should pop open the supplementary materials, because all the fun mathematics are there:

- The full details of the numerical algorithm, including information on our optimizations.

- Convergence tests: For several examples, we showed:

  - First-order convergence in time (with respect to Δt), and stability

  - Second-order convergence in space (with respect to Δx)

- Accuracy tests: For each convergence test, we looked at how small Δt has to be to ensure 5% relative accuracy at Δx = 20 μm resolution. For oxygen-like problems with cell-based sources and sinks, Δt = 0.01 min will do the trick. This is about 15 times larger than the stability-restricted time step for explicit methods.

- Performance tests:

  - Computational cost (wall time to simulate a fixed problem on a fixed domain size with fixed time/spatial resolution) increases linearly with the number of substrates. 5-10 substrates are very feasible on desktop workstations.

  - Computational cost increases linearly with the number of voxels

  - Computational cost increases linearly with the number of cell-based sources/sinks
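As a rough sanity check on the “about 15 times” figure in the accuracy tests above: the standard stability limit for an explicit (forward Euler, centered difference) diffusion scheme in 3-D is Δt ≤ Δx²/(6D). The value D ≈ 10^5 μm²/min used below is an assumed, commonly quoted oxygen diffusion coefficient; it is not stated in this post.

\[ \Delta t_\textrm{explicit} \le \frac{ \Delta x^2 }{ 6 D } = \frac{ \left( 20 \: \mu\textrm{m} \right)^2 }{ 6 \times 10^5 \: \mu\textrm{m}^2 / \textrm{min} } \approx 6.7 \times 10^{-4} \textrm{ min}, \qquad \frac{ 0.01 \textrm{ min} }{ 6.7 \times 10^{-4} \textrm{ min} } \approx 15 \]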

And of course because this code is open sourced, you can dig through the implementation details all you like! (And improvements are welcome!)

What’s next?

- As MultiCellDS (multicellular data standard) matures, we will implement read/write support for <microenvironment> data in digital snapshots.

- We have a few ideas to improve the speed of the cell-based sources and sinks. In particular, switching to a higher-order accurate solver may allow larger time step sizes, so long as the method is still stable. For the specific form of the sources/sinks, the trapezoid rule could work well here.

- I’d like to allow a spatially-varying diffusion coefficient. We could probably do this (at very great memory cost) by writing separate Thomas solvers for each strip in x, y, and z, or by giving up the pre-computation part of the optimization. I’m still mulling this one over.

- I’d also like to implement non-Cartesian meshes. The data structure isn’t a big deal, but we lose the LOD optimization and Thomas solvers. In this case, we’d either use explicit methods (very slow!), use an iterative matrix solver (trickier to parallelize nicely, except in matrix-vector multiplication operations), or start with quasi-steady problems that let us use Gauss-Seidel type iterative methods, like this old paper.

- Since advective flow (particularly interstitial flow) is so important for many problems, I’d like to add an advective solver. This will require some sort of upwinding to maintain stability.

- At some point, we’d like to port this to GPUs. However, I don’t currently have time / resources to maintain a separate CUDA or OpenCL branch. (Perhaps this will be an excuse to learn Julia on GPUs.)
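To give a feel for the trapezoid rule idea in the list above, here is a small sketch comparing it with backward Euler on the cell source/sink ODE for one substrate, d(rho)/dt = S (rho* - rho) - U rho. This is only an illustration under that assumption. CellSource, backward_euler_step, trapezoid_step, integrate and exact are made-up names, and nothing here says how a future BioFVM release will actually do it.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical per-cell rates for one substrate (illustration only).
struct CellSource {
    double S;         // secretion rate
    double U;         // uptake rate
    double rho_star;  // saturation density
};

// First-order accurate: backward Euler.
double backward_euler_step(double rho, const CellSource& c, double dt) {
    return (rho + dt * c.S * c.rho_star) / (1.0 + dt * (c.S + c.U));
}

// Second-order accurate: trapezoid rule (average of old and new right-hand sides).
double trapezoid_step(double rho, const CellSource& c, double dt) {
    const double half_k = 0.5 * dt * (c.S + c.U);
    return (rho * (1.0 - half_k) + dt * c.S * c.rho_star) / (1.0 + half_k);
}

// Take n_steps steps of size dt with the chosen stepping function.
template <class Step>
double integrate(Step step, double rho0, const CellSource& c, double dt, int n_steps) {
    assert(dt > 0.0 && n_steps >= 0);
    double rho = rho0;
    for (int i = 0; i < n_steps; ++i) rho = step(rho, c, dt);
    return rho;
}

// Exact solution, for measuring the error.
double exact(double rho0, const CellSource& c, double t) {
    const double k = c.S + c.U;
    const double rho_inf = c.S * c.rho_star / k;
    return rho_inf + (rho0 - rho_inf) * std::exp(-k * t);
}
```

Both formulas cost about the same per step, so any gain would come from taking fewer, larger steps. One caveat: the trapezoid update stays bounded for any dt, but once dt (S + U) exceeds 2 the factor (1 - half_k) goes negative and the solution oscillates about its steady state, so there is still a practical limit on the step size.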

Well, we hope you find BioFVM useful. If you give it a shot, I’d love to hear back from you!

Very best — Paul

Paul Macklin profiled in New Scientist article

Paul Macklin was recently featured in a New Scientist article on multidisciplinary jobs in cancer. It profiled the non-linear path he and others took to reach a multi-disciplinary career blending biology, mathematics, and computing.

Read the article: http://jobs.newscientist.com/article/knocking-cancer-out/ (Apr. 16, 2015)

Banner and Logo Contest : MultiCellDS Project

As the MultiCellDS (multicellular data standards) project continues to ramp up, we could use some artistic skill.

Right now, we don’t have a banner (aside from a fairly barebones placeholder using a lovely LCARS font) or a logo. While I could whip up a fancier banner and logo, I have a feeling that there is much better talent out there. So, let’s have a contest!

Here are the guidelines and suggestions:

- The banner should use the text MultiCellDS Project. It’s up to the artist (and the use) whether the “multicellular data standards” part gets written out more fully (e.g., below the main part of the banner).

- The logo should be shorter and easy to use on other websites. I’d suggest MCDS, stylized similarly to the main banner.

- Think of MultiCell as a prefix: MultiCellDS, MultiCellXML, MultiCellHDF, MultiCellDB. So, the “banner” version should be extensible to new directions on the project.

- The banner and logo should be submitted in a vector graphics format, with all source.

- It goes without saying that you can’t use clip art that you don’t have rights to. (i.e., use your own artwork or photos, or properly-attributed creative commons-licensed art.)

- The banner and logo need to belong to the MultiCellDS project once done.

- We may do some final tweaks and finalization on the winning design for space or other constraints. But this will be done in full consultation with the winner.

So, what are the perks for winning?

- Permanent link to your personal research / profession page crediting you as the winner.

- A blog post detailing how awesome you and your banner and logo are.

- Beer / coffee is on me next time I see you. SMB 2015 in Atlanta might be a good time to do it!

- If we ever make t-shirts, I’ll buy yours for you. :-)

- You get to feel good for being awesome and helping out the project!

So, please post here, on the @MultiCellDS twitter feed, or contact me if you’re interested. Once I get a sense of interest, I’ll set a deadline for submissions and “voting” procedures.

Thanks!!

2015 Speaking Schedule

Here is my current speaking schedule for 2015. Please join me if you can!

- Feb. 13, 2015: Seminar at the Institute for Scientific Computing Research, Lawrence Livermore National Laboratory (LLNL)

- Title: Scalable 3-D Agent-Based Simulations of Cells and Tissues in Biology and Cancer [abstract]

See Also: